Train Yourself – Sharpen Your Senses

Verification is not just about tools; our human senses are just as essential. Whom can we trust, if not our own senses?

Digger Deepfake Detection

The Digger project aims to combine visual verification and audio forensic technologies to detect both shallowfakes and deepfakes, or synthetic media, as we call them.

Shallowfakes are manipulated audiovisual content (image, audio, video) created with ‘low-tech’ methods such as cut-and-paste editing or speed adjustments. Often taken out of context, such content can be extremely convincing.

Deepfakes, or synthetic media, are artificial audiovisual content (image, audio, video) generated with technologies such as machine learning, and they can be extremely realistic.

In-Depth Interview – Sam Gregory

Sam Gregory is Program Director of WITNESS, an organisation that works with people who use video to document human rights issues. WITNESS focuses on how people create trustworthy information that can expose abuses and address injustices. How is that connected to deepfakes?

Audio Synthesis, what’s next? – Parallel WaveGAN

Parallel WaveGAN is a neural vocoder that produces high-quality audio faster than real time. With progress this fast, could personalized vocoders be possible in the near future?

In-Depth Interview – Jane Lytvynenko

We talked to Jane Lytvynenko, a senior reporter at BuzzFeed News who covers online mis- and disinformation, about how big the synthetic media problem actually is. Jane shares three practical tips on how to detect deepfakes and how to handle disinformation.

From Rocket Science to Journalism

In the Digger project, we aim to bring scientific audio forensic functionality into journalistic tools to detect both shallowfakes and deepfakes. At the Truth and Trust Online Conference 2020, we explained how we are doing this.

Audio Synthesis, what’s next? – Mellotron

Expressive voice synthesis with rhythm and pitch transfer: Mellotron can make a person sing without ever having recorded their voice performing a song. Interested? Here is more…

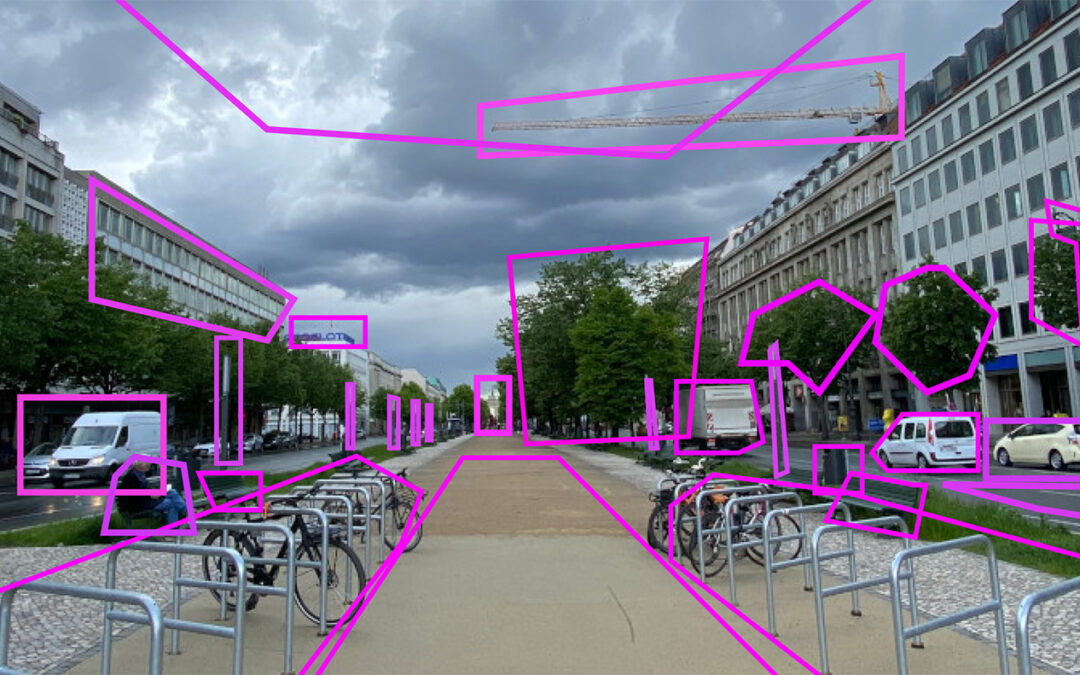

Video verification step by step

What should you do if you encounter a suspicious video online? Although there is no golden rule for video verification and every case has its own particularities, a few basic steps are a good place to start.

The dog that never barked

Deepfakes have the potential to seriously harm people’s lives and to erode their trust in democratic institutions. They also keep making headlines. How dangerous are they really?

ICASSP 2020 – International Conference on Acoustics, Speech, and Signal Processing

Here are what we consider the most relevant upcoming audio-related conferences, and the sessions we think you should attend at ICASSP 2020.

Who We Are

Digger is a project by Deutsche Welle, Fraunhofer IDMT and ATC. Learn more about our team here. The project is made possible by the Google DNI fund.

Get Involved

Your opinion and expertise matter to us.

Please get involved by commenting on the articles here or by reaching out on Twitter. Thanks!

Follow Us

We would love to see you on Twitter!