In-Depth Interview – Jane Lytvynenko

We talked to Jane Lytvynenko, a senior reporter with BuzzFeed News who focuses on online mis- and disinformation, about how big the synthetic media problem actually is. Jane has three practical tips for us on how to detect deepfakes and how to handle disinformation.

Jane Lytvynenko is a senior reporter with BuzzFeed News, based in Canada. She primarily focuses on online mis- and disinformation. You can check out her work here. We talked to Jane about how big the synthetic media problem actually is, and she gave us practical tips on how to detect deepfakes and how to handle disinformation.

Jane, what is your definition of a deepfake?

Oh, that is a tricky one. I mean, it is very different from a video that was just slowed down or manipulated in a very basic way, for example by cutting and pasting scenes.

For me a deepfake is using computer technology to make it look like a person said something or took an action they did not say or do.

Where do you mostly encounter deepfakes at the moment?

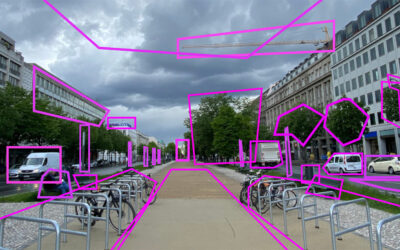

We do not encounter deepfakes a lot on the day-to-day. Because the technology is not widely accessible at the moment, we are much more worried about cheapfakes than we are about deepfakes. They spread much faster. We do find deepfakes mostly in satire. We have seen a lot of that come up in the last little while. We also see images generated by GANs (generative adversarial networks) being used for fake personas: essentially, faces generated by a computer that are used to present a persona on social media that doesn’t actually exist in real life.

Do you expect an increase in synthetic video media during the US elections?

No. The reason why I say no is because deepfakes are still fairly difficult to create for people who do not have a lot of tech knowledge. But cheapfakes you can make in iMovie. You can make things using very basic tools that are also convincing.

There is of course always a fear in the back of my head of the ‘Big One’. What is going to be the big deepfake that fools everybody?

Another fear that I have is a deepfake inserted among legitimate videos, where only one small portion is a deepfake rather than the whole video.

Where do deepfakes have their biggest impact?

Right now, we see deepfakes mostly used for harassment of women, in pornography in particular. They are primarily targeted at women, but sometimes also at men. Deepfake technology is also being used in Hollywood movies and, as I mentioned previously, for high-production satire.

But when it comes to the field of politics, we’ve seen a couple here and there but in North America we haven’t seen deepfakes that are so convincing that they uproot the political conversation.

Should journalists be concerned?

Do all journalists need to be trained in verification? Or is that a task for experts?

I think it needs to be both. In order to send something to a researcher you need to first understand what it is you are looking for and why you are sending it to a researcher.

So, we need to have basic training. We need reporters to understand what a deepfake looks like. What a GAN-generated image looks like. Get them in on the basics of verification of all types of content. If something extremely technical comes up, they can just send it to the researchers.

For journalists it is very important to have that source. It is very important to have an expert opinion for second verification. That is part of the practice of journalism. But if you do not know how to ask the right question, you are not going to be able to get that second opinion.

What are the tools you still miss?

There are a few things. The biggest problem is social media discovery, especially when it comes to video. If there is a video going viral it is fairly difficult to trace back where it came from. There are some tools that break video down into thumbnails. You can then reverse image search them and try to find your way back. But for me right now, there is no way to tell where the video originated. Part of that is a lack of cross-platform searches. For example, Instagram stories; it is one of the most popular Facebook products right now, but they disappear within 24 hours. If somebody downloads that Instagram story and cuts off the banner that says who posted it, they can upload it to Twitter or Facebook, and I will not know where the video came from. It doesn’t allow reporters to see the bigger picture.

Right now, we do not necessarily have the tools to both look at video cross-platform and to look at these videos in terms of when they were shot, what time, by whom, from what angle, at what location. It is a challenge that requires a lot of time that reporters just do not have.
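To illustrate the thumbnail approach mentioned above, here is a minimal sketch that uses the `ffmpeg` command-line tool to save one still frame every few seconds, so each frame can then be uploaded to a reverse image search by hand. It assumes `ffmpeg` is installed; the function names, the file naming pattern and the five-second interval are illustrative choices, not a tool named in the interview.

```python
import shutil
import subprocess
from pathlib import Path


def build_frame_command(video_path, out_dir, every_seconds=5):
    """Build an ffmpeg command that saves one still frame every `every_seconds` seconds."""
    if every_seconds <= 0:
        raise ValueError("every_seconds must be positive")
    # Hypothetical naming pattern: frame_0001.jpg, frame_0002.jpg, ...
    pattern = str(Path(out_dir) / "frame_%04d.jpg")
    # The fps filter with a rate of 1/N outputs one frame per N seconds of video.
    return ["ffmpeg", "-i", str(video_path), "-vf", f"fps=1/{every_seconds}", pattern]


def extract_frames(video_path, out_dir, every_seconds=5):
    """Run ffmpeg and return the saved frame files in order."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg was not found on PATH")
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(build_frame_command(video_path, out_dir, every_seconds), check=True)
    return sorted(Path(out_dir).glob("frame_*.jpg"))
```

Calling `extract_frames("viral_clip.mp4", "frames")` on a local copy of a video would leave a folder of stills to search one by one. This only helps with finding earlier copies of the footage; it does not solve the cross-platform tracing problem described here.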

The other thing is, we do not really have a strong way of mapping video spread. So, when reporters do content analysis, they generally focus on text. The reason for that is because text is machine-readable. We have the tools to sort of map out the biggest account that posted this, the smaller accounts that came from it and the sort of audience that looked at this. We do not have similar tools for video even though analyzing the spreading of information is one of the most useful things we do as disinformation reporters. It allows us to see the key points where disinformation traveled. It allows us to understand where to look next time. It allows us most importantly to understand which communities were most impacted.
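As a rough illustration of the kind of spread mapping that is possible for text posts, the sketch below takes a handful of share records and works out the largest account and who reshared from whom. The records, account names and follower counts are entirely made up, and the record layout is an assumption; real platform data would look different and is often not available to reporters.

```python
from collections import defaultdict

# Hypothetical share records: (account, follower_count, reshared_from or None)
shares = [
    ("big_account", 250_000, None),
    ("small_a", 1_200, "big_account"),
    ("small_b", 300, "big_account"),
    ("small_c", 80, "small_a"),
]


def map_spread(records):
    """Return the account with the most followers and a map of source -> resharers."""
    largest = max(records, key=lambda record: record[1])[0]
    reshared_by = defaultdict(list)
    for account, _followers, source in records:
        if source is not None:
            reshared_by[source].append(account)
    return largest, dict(reshared_by)


largest, reshared_by = map_spread(shares)
```

Here `largest` identifies the biggest account that posted the item and `reshared_by` lists the smaller accounts that picked it up from each source, which is the sort of map that is currently hard to build for video.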

Is collaboration with researchers and platforms essential in fighting disinformation?

Definitely collaboration with researchers. Platforms are a bit on and off again in terms of what kind of information they are willing to provide us with. Sometimes they are willing to confirm findings, but they rarely help us to do research independently.

This is where researchers, analytics firms and other third parties that specialize in this are really key for reporters.

How should we report about disinformation and deepfakes?

At BuzzFeed News we always try to put correct information first. We repeat the accurate information before you get to the inaccurate information. There are two different approaches you can take. One is reporting on the content of the video and the other is reporting on the existence of the video as well as any information you have in terms of where it came from, who posted it and why.

We generally focus on the second approach as the primary presentation of facts.

That is how we frame a lot of these things. Because the key aim of a manipulated video is to get the message across and if we put the message at the top then they still get the message across. What you want to do is describe the techniques, describe how they are attempting to manipulate the audience. And then explain the other part of manipulation which is the message.

Do you have a specific workflow in verifying digital content?

We do have best practices. Putting accurate information first is definitely the top priority. We also make sure to never put up an image or a screenshot without stamping it or crossing it out in some way. That gives a visual clue to anybody who comes across it that it is false. But more importantly, if a search engine scrapes that image and somebody comes across it on Google or Bing they are immediately able to see that it is false.

In terms of workflow for verification, the key part is documentation. We archive everything that we come across and take a screenshot. We are essentially making sure that we are able to retrace our steps. It is kind of a scientific process: we want to make sure that if anybody repeated our steps, they would come to the same conclusion. A lot of the time when we do pure debunks, that is what we focus on. Not only does it increase trust and show how we got to the conclusion, it also teaches our audience some of the techniques that we are using so that they can use them in the future as well.
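One way to keep verification steps retraceable is a simple append-only log. The sketch below records where an item was found, a short note, a UTC timestamp and a SHA-256 fingerprint of the screenshot. It is an illustration only: the field names, the JSON Lines file format and the function names are assumptions, not BuzzFeed News's actual tooling.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_log_entry(url, note, screenshot_bytes):
    """Describe one verification step, with a fingerprint of the screenshot taken."""
    return {
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "url": url,
        "note": note,
        # The hash lets anyone confirm later that the archived screenshot is unchanged.
        "screenshot_sha256": hashlib.sha256(screenshot_bytes).hexdigest(),
    }


def append_entry(log_path, entry):
    """Append the entry as one JSON line so earlier steps are never overwritten."""
    with open(log_path, "a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(entry, ensure_ascii=False) + "\n")
```

For example, `append_entry("debunk_log.jsonl", make_log_entry(post_url, "first sighting", image_bytes))` adds one line per step, so the log can be read top to bottom to repeat the process.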

Can you still trust what you are seeing, or do you always have this critical view?

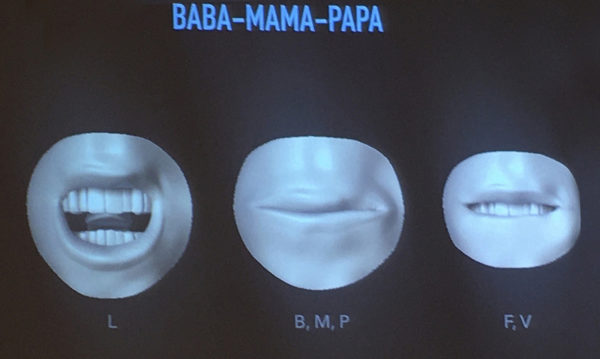

The short answer is yes, especially with a lot of videos. I really fear missing something because sometimes the manipulation is so subtle you can’t quite tell that it is a manipulation from the first or the second look. If somebody is just scrolling on their feed, they are not looking very closely at those details. They might not even listen to the audio and hear that it sounds off. They might read the subtitles instead. They might not notice that the mouth in a deepfake is a little bit imperfect because those are little details. We are bombarded with information in our news feed so we might just not notice it.

I’m always extremely suspicious, and sometimes I’m more suspicious than I should be. Sometimes I look at a video and I’m like, was that slowed down by 0.7 of a second or am I losing my mind?

What are the three main tips for a news consumer to detect synthetic media?

Tip #1

My first tip: if you see a video that is extremely viral, that sparks a lot of emotion, or that just feels a little bit off, pause.

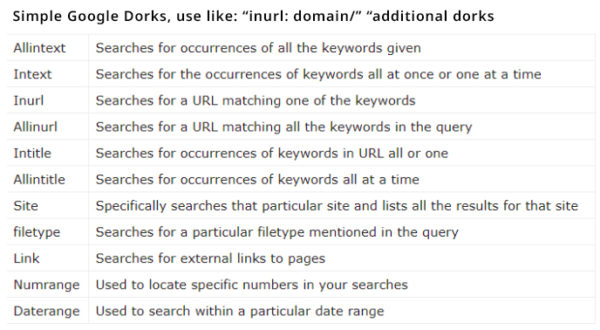

From there, the number one thing you can do is search a couple of key terms in a search engine together with the words “fact check” to see if somebody has already picked up on what you are seeing. You can also read the comments. Very often people in the comments will explain what is going on in the video.

Tip #2

If you are unsure about the video, really do play the audio and look at the key features that make people people. Look at the eyes, look at the mouth. What are the mannerisms of the person that you are seeing in the video, and do they match what you understand about that person? Ask yourself if the voice of the person sounds like their real voice.

A lot of people, when they see a GAN-generated photo for example, have a gut feeling that something is wrong. They feel that they are not looking at a real person, but they can’t quite explain why. So, just really trust that feeling and start looking for those little signs that something is wrong. If it is a photo, usually the best thing to look at is the earlobes. If a person has glasses, look at the glasses. Eyebrows are generally not perfect if a photo is computer-generated, and teeth are always a little off. Those are the things that I would look for.

Tip #3

The final tip is: do not share anything you are not sure of. Do not pass it on to your network.

We all have created a small online community around us, whether it is friends, family, acquaintances or sort of strangers that we met on the internet. And most of that community really trusts us. Even if you are not a public figure your friends are going to trust what you post. So, take that responsibility seriously and try to not pass on anything that you are unsure of to that online community.

If not deepfakes, what else is our challenge in disinformation?

My biggest worry when it comes to disinformation is not necessarily synthetic media. It is humans trying to convince other humans.

Look at the most insidious falsehoods that we see right now in the US: the QAnon mass delusion, as we call it at BuzzFeed. People who believe in this are very often brought on board by other people they know. So, what I really worry about is the continuing creation of online communities where people bring one another along for the ride, except the ride is extremely false.

I think that manipulated images and manipulated videos and fake news articles are all just tools. They are all parts of the problem. But the problem itself I think is a community problem and I definitely foresee that community problem growing beyond synthetic media.

Many thanks, Jane! If you are interested in learning more or have questions, please get in touch with us, either by commenting on this article or via our Twitter channel.

We hope you liked it! Happy Digging and keep an eye on our website for future updates!

Don’t forget: be active and responsible in your community – and stay healthy!
