Verification is not just about tools: our human senses are essential too. Whom can we trust, if not our own senses?

Video verification step by step

What should you do if you encounter a suspicious video online? Although there is no golden rule for video verification and each case may present its own particularities, the following steps are a good way to start.

Pay attention and ask yourself these basic questions

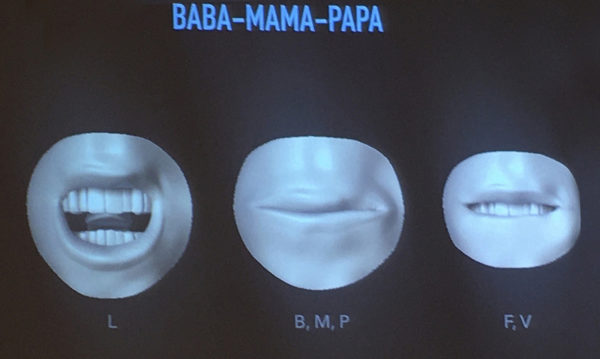

Start by asking some basic questions, such as “Could what I am seeing here be true?”, “Who is the source of the video and why am I seeing/receiving this?”, “Am I familiar with this account?”, “Has the account’s content and reporting been reliable in the past?” and “Where is the uploader based, judging by the account’s history?”. Thinking about the answers to such questions may raise red flags that give you reason to be skeptical of what you see. Also, watch the video at least twice and pay close attention to the details; this remains your best chance of identifying fake videos, especially deepfakes. Careful viewers may be able to detect inconsistencies in the video (e.g. lips that are out of sync with the audio or irregular background noise) or signs of editing and manipulation (e.g. blurry areas of a face or strange cuts). Most video manipulation is still visible to the naked eye. If you want to read more about dealing with dubious claims in general, see our previous blog post.

Capture and reverse search video frames

When you encounter a suspicious image, reverse searching it on Google or Yandex is one of the first steps to take to find out whether it has been used before in another context. For videos, reverse video search tools are not yet commercially available, but there are workarounds that let you examine the provenance of a video and see whether similar or identical videos have circulated online in the past. Tools such as Frame-By-Frame let users view a video frame by frame and capture and save any frame; if you have VLC media player installed, it can do this as well.
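If you are comfortable with the command line, the free tool ffmpeg can also save frames at regular intervals so you can feed them to reverse image search. Note that ffmpeg is not one of the tools named above; it is an assumption of this sketch, which only requires ffmpeg to be installed and on your PATH. The function names and the output pattern `frame_%04d.png` are our own illustrative choices.

```python
import shutil
import subprocess
from fractions import Fraction
from pathlib import Path


def frame_extraction_cmd(video, out_dir, every_seconds=1.0):
    """Build an ffmpeg command that saves one PNG frame every `every_seconds`."""
    if every_seconds <= 0:
        raise ValueError("every_seconds must be positive")
    # ffmpeg's fps filter expects frames per second, so one frame every
    # N seconds is a rate of 1/N, written here as an exact fraction.
    rate = 1 / Fraction(every_seconds).limit_denominator(1000)
    pattern = str(Path(out_dir) / "frame_%04d.png")
    return ["ffmpeg", "-i", str(video), "-vf", f"fps={rate}", pattern]


def extract_frames(video, out_dir, every_seconds=1.0):
    """Run the command; requires ffmpeg to be installed and on PATH."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg was not found on PATH")
    Path(out_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(frame_extraction_cmd(video, out_dir, every_seconds), check=True)
```

For example, `extract_frames("suspicious.mp4", "frames", every_seconds=2)` would save one frame every two seconds into a `frames` folder. Sampling at fixed intervals is the simplest option; short scenes may fall between samples, so a shorter interval is safer for fast-cut videos.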

Cropping certain parts of a frame or flipping the frame before running a reverse search may sometimes yield unexpected results; flipping matters because disinformation actors sometimes mirror images to make it harder to find the original source through reverse image search. Searching several reverse search engines (Google, Yandex, Baidu, TinEye, Karma Decay for Reddit, etc.) also increases the chance of finding the original video. The InVID-WeVerify plugin can help you verify images and videos with a set of tools covering contextual clues, image forensics, reverse image search, keyframe extraction and more.
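To make cropping and flipping concrete, here is a toy Python sketch that models a frame as a small grid of pixel values. This grid model is an assumption for illustration only; in practice an image editor, an image library or the plugin above does this for you. It produces the variants you might submit to each search engine: the original, a mirrored copy, a crop and a mirrored crop. The crop box uses (left, top, right, bottom) order, with right and bottom exclusive.

```python
# Toy model: a frame is a list of rows, each row a list of pixel values.
Frame = list[list[int]]


def mirror(frame: Frame) -> Frame:
    """Flip a frame horizontally, swapping left and right."""
    return [row[::-1] for row in frame]


def crop(frame: Frame, left: int, top: int, right: int, bottom: int) -> Frame:
    """Keep columns left..right-1 and rows top..bottom-1."""
    if not (0 <= left < right <= len(frame[0]) and 0 <= top < bottom <= len(frame)):
        raise ValueError("crop box lies outside the frame")
    return [row[left:right] for row in frame[top:bottom]]


def search_variants(frame: Frame, box: tuple[int, int, int, int]) -> dict[str, Frame]:
    """Return the variants worth submitting to each reverse search engine."""
    cropped = crop(frame, *box)
    return {
        "original": frame,
        "mirrored": mirror(frame),
        "cropped": cropped,
        "cropped_mirrored": mirror(cropped),
    }
```

Mirroring twice returns the original frame, which is why flipping a suspected mirrored image back can help a search engine find the unflipped original.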

Examine the location where the video was allegedly filmed

Although in some cases it is very difficult or nearly impossible to verify where a video was shot, at other times landmarks, reference points or other distinctive features in the video may reveal its filming location. For example, road signs, shop signs, natural landmarks like mountains, and distinctive buildings or other structures can help you corroborate the video’s filming location.

It’s @Quiztime

Was this photo taken before the start of the lockdown or after it was eased?

Reply to just me with your answer

Reply to all for collaboration &

Good luck with the #MondayQuiz pic.twitter.com/iHsMFhXqF9

Julia Bayer (@bayer_julia), May 11, 2020

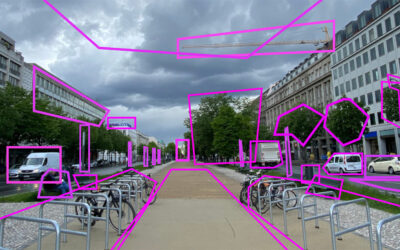

Tools like Google Maps, Google Street View, Wikimapia and Mapillary can be used to cross-check whether the actual filming location matches the alleged one. Checking the historical weather conditions for the claimed place, date and time is another way to verify a video. Shadows visible in the video should also be checked for consistency with the sun’s position at that location on that particular day and time. SunCalc is a tool that helps with this: it shows the sun’s movement and sunlight phases for a given day and time at a given location. Sometimes it also helps to stitch several keyframes together to narrow down the location; Amnesty International has published a helpful tutorial on this technique.
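As a rough cross-check alongside SunCalc, the sun’s elevation can be estimated with standard textbook approximations for solar declination and the equation of time, and from it the length of the shadow a vertical object should cast. This Python sketch is our own approximation, not SunCalc’s algorithm: it ignores atmospheric refraction and terrain and can be off by roughly a degree, so treat it as a sanity check rather than proof.

```python
import math
from datetime import datetime, timezone


def solar_elevation(lat_deg, lon_deg, when):
    """Approximate sun elevation in degrees above the horizon.

    lat_deg/lon_deg are decimal degrees (north and east positive). `when` is a
    datetime; naive values are treated as UTC. Textbook approximations only,
    so expect errors of up to roughly a degree.
    """
    if when.tzinfo is not None:
        when = when.astimezone(timezone.utc)
    n = when.timetuple().tm_yday
    b = math.radians(360 / 365 * (n - 81))
    # Solar declination (radians) and equation of time (minutes).
    decl = math.radians(23.44 * math.sin(b))
    eot = 9.87 * math.sin(2 * b) - 7.53 * math.cos(b) - 1.5 * math.sin(b)
    # Local solar time: UTC plus 4 minutes per degree of longitude, plus EoT.
    utc_hours = when.hour + when.minute / 60 + when.second / 3600
    solar_hours = utc_hours + (4 * lon_deg + eot) / 60
    hour_angle = math.radians(15 * (solar_hours - 12))
    lat = math.radians(lat_deg)
    sin_elev = (math.sin(lat) * math.sin(decl)
                + math.cos(lat) * math.cos(decl) * math.cos(hour_angle))
    return math.degrees(math.asin(max(-1.0, min(1.0, sin_elev))))


def shadow_length(object_height, elevation_deg):
    """Shadow cast on flat ground by a vertical object of the given height."""
    if elevation_deg <= 0:
        raise ValueError("the sun is below the horizon")
    return object_height / math.tan(math.radians(elevation_deg))
```

For example, if a two-metre pole in the video casts a shadow of about two metres, the sun should be roughly 45° above the horizon. If `solar_elevation` for the claimed place and time is far from that, the claimed time deserves a closer look.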

Video metadata and image forensics

Even though most social media platforms strip metadata from videos and images when they are uploaded, if you have the original video file you can use your computer’s native file browser or tools like ExifTool to examine the video’s metadata. With tools like Amnesty International’s YouTube DataViewer, you can also find out the exact day and time a video was uploaded to YouTube. If the steps above do not yield conclusive results and you are still unsure about the video, you can try more elaborate ways of assessing its authenticity. With tools like the InVID-WeVerify plugin or FotoForensics, you can examine an image or a video frame for manipulation using forensic algorithms such as Error Level Analysis (ELA) and Double Quantization (DQ). These algorithms may reveal signs of manipulation such as editing, cropping, splicing or drawing. However, interpreting the results correctly and drawing sound conclusions without false positives requires some familiarity with image forensics.
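For the metadata step, ExifTool can also be scripted. The Python sketch below assumes ExifTool is installed and on your PATH, calls it with its `-json` option and picks out a few date tags. Which tags exist depends on the file format and the recording device, so the tag list is an assumption; and since metadata can be stripped or edited, any date found is a clue, not proof.

```python
import json
import shutil
import subprocess
from datetime import datetime

# Tag names differ between file formats and devices; these QuickTime/MP4-style
# date tags are an assumption about what is worth checking first.
DATE_TAGS = ("CreateDate", "ModifyDate", "MediaCreateDate", "TrackCreateDate")


def read_metadata(path):
    """Run ExifTool on a local file and return its tags as a dict."""
    if shutil.which("exiftool") is None:
        raise RuntimeError("exiftool was not found on PATH")
    result = subprocess.run(["exiftool", "-json", str(path)],
                            capture_output=True, text=True, check=True)
    # ExifTool's JSON output is a list with one object per input file.
    return json.loads(result.stdout)[0]


def date_clues(tags):
    """Keep only the date tags that are actually present in the file."""
    return {name: tags[name] for name in DATE_TAGS if name in tags}


def parse_exif_date(value):
    """Parse ExifTool's 'YYYY:MM:DD HH:MM:SS' format, ignoring any suffix.

    Returns None for placeholder values such as '0000:00:00 00:00:00'.
    """
    try:
        return datetime.strptime(value[:19], "%Y:%m:%d %H:%M:%S")
    except ValueError:
        return None
```

Time-zone handling of these dates varies between devices, so compare them with the alleged filming date loosely rather than to the minute.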

A critical mind and an eye for detail

As mentioned above, there is no golden rule for verifying videos. The steps above are by no means exhaustive, but they are a good start. As new detection methods are developed, so are new manipulation methods, in a game that shows no sign of ending. The commercialization of the technology behind deepfakes through openly accessible applications like Zao or Doublicat is making matters worse, driving the “democratization of propaganda”. What remains most important, regardless of the tools available for detecting manipulated media, is to approach any kind of online information (especially user-generated content) with a critical mind and an eye for detail. Traditional steps in the verification process, such as checking the source and triangulating all available information, remain central.

In the effort to tackle mis- and disinformation, collaboration is key. In Digger, we work with Truly Media to provide journalists with a working environment where they can verify online content collaboratively. Truly Media is a collaborative platform developed by Athens Technology Center and Deutsche Welle that helps teams collect and organise content relevant to an investigation and decide together how trustworthy the information they have found is. To make the verification process as easy as possible for journalists, Truly Media integrates many of the tools and processes mentioned above and offers a set of image and video tools that help users verify multimedia content. Truly Media is a commercial platform; for a demo, go here.

How to get started?

If you are new to verification or would like to learn more about the whole verification process, we suggest reading the first edition of the Verification Handbook, the Verification Handbook for Investigative Reporting, and the latest edition, published in April 2020.

Stay tuned and get involved

We will publish regular updates about our technology and external developments, and interview experts to learn from their ethical, legal and hands-on expertise.

The Digger project is developing a community to share knowledge and initiate collaboration in the field of synthetic media detection. Interested? Follow us on Twitter @Digger_project and send us a DM or leave a comment below.
