Audio Synthesis, what’s next? – Parallel WaveGAN

The Parallel WaveGAN is a neural vocoder producing high quality audio faster than real-time. Are personalized vocoders possible in the near future with this speed of progress?

In our previous post of the “Audio Synthesis: What’s next?” series, we started talking about the latest advances in audio synthesis. In this post we will introduce you to the Parallel WaveGAN network.

Not easy

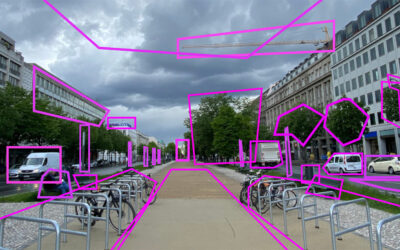

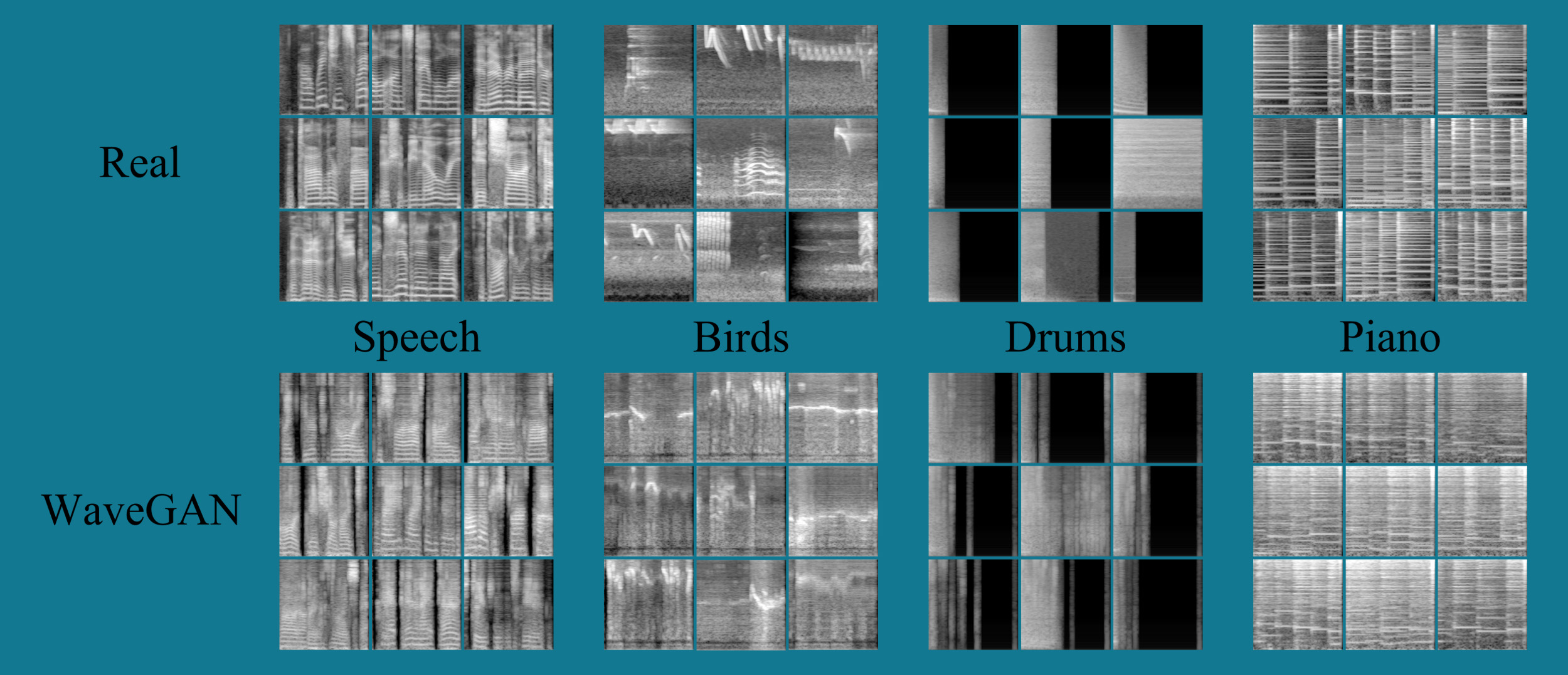

You probably already guessed that text-to-speech is actually a very HARD task. Let us tell you something: it is so hard that it has been split into two separate problems. Some researchers focus on translating the input text into a time-frequency representation; the spectrograms shown here, for example, are generated by Tacotron-like networks. Other researchers focus on translating those pictures into proper audio files that sound as natural as possible. This is the general goal of “neural vocoders”, a family that includes the Parallel WaveGAN itself.
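To make the idea of a "time-frequency representation" a bit more concrete, here is a minimal sketch in plain Python. It is not Tacotron and not the Parallel WaveGAN: it only computes a magnitude spectrogram of a synthetic sine tone with a naive DFT, so you can see what kind of "picture" a vocoder receives as input. The function name `spectrogram`, the frame size, the hop size and the sample rate are all illustrative assumptions; real systems typically work with mel-spectrograms and optimized FFT routines.

```python
import cmath
import math

SAMPLE_RATE = 8000   # assumed sample rate in Hz (illustrative)
FRAME_SIZE = 64      # assumed analysis window length in samples
HOP = 32             # assumed step between consecutive windows
TONE_HZ = 1000       # frequency of the synthetic test tone


def spectrogram(signal, frame_size=FRAME_SIZE, hop=HOP):
    """Return a list of frames, each a list of DFT magnitudes.

    Naive O(N^2) DFT with a Hann window: fine for a demo, far too slow
    for real audio.
    """
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_size)
              for n in range(frame_size)]
    frames = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        frame = [signal[start + n] * window[n] for n in range(frame_size)]
        bins = []
        # Only the non-negative frequencies are needed for a real signal.
        for k in range(frame_size // 2 + 1):
            acc = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                      for n in range(frame_size))
            bins.append(abs(acc))
        frames.append(bins)
    return frames


# A pure sine tone stands in for speech here.
signal = [math.sin(2 * math.pi * TONE_HZ * t / SAMPLE_RATE) for t in range(512)]
spec = spectrogram(signal)

# The strongest frequency bin of the first frame should match the tone.
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
peak_hz = peak_bin * SAMPLE_RATE / FRAME_SIZE
print(len(spec), "frames x", len(spec[0]), "bins, peak at", peak_hz, "Hz")
```

Each row of `spec` is one time step and each column one frequency band. A neural vocoder has to solve the reverse problem: given only these magnitudes, produce a waveform that sounds natural.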

Fast

The Parallel WaveGAN was proposed by three researchers from the LINE (Japan) and NAVER (South Korea) corporations, with the goal of improving on pre-existing neural vocoders. The researchers focused on one of the most demanding requirements of neural vocoders: producing high quality audio in a “reasonable” amount of time. Their efforts were rewarded when they achieved a system that works faster than real-time and can be trained four times as fast as the competition.

Before & After

Before Parallel WaveGAN, achieving this level of audio quality faster than real-time meant spending at least 2 weeks training a neural vocoder on a very high-end GPU. With Parallel WaveGAN it is possible to create remarkably high quality audio files after only 3 days of training on the same high-end GPU. On top of that, it is possible to produce content 28 times faster than real-time! With such speed of progress, sooner or later consumer GPUs could be used to train such models as well, which would mean that a new era of personalized vocoders is quickly getting closer.
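If you want a feeling for what these numbers mean in practice, here is a small back-of-the-envelope sketch. It only uses the figures quoted in this post (28 times faster than real-time, 2 weeks versus 3 days of training); the function name `synthesis_seconds` is made up for illustration, and actual timings depend on the hardware and the model configuration.

```python
def synthesis_seconds(audio_seconds, speedup_over_real_time):
    """Time needed to generate `audio_seconds` of audio at a given speedup."""
    return audio_seconds / speedup_over_real_time


SPEEDUP = 28            # "28 times faster than real-time", as quoted above
TRAINING_DAYS_BEFORE = 14   # "at least 2 weeks"
TRAINING_DAYS_AFTER = 3     # "only 3 days"

# Generating one minute of speech takes roughly two seconds.
one_minute = synthesis_seconds(60, SPEEDUP)
print(f"1 minute of audio: about {one_minute:.2f} s")  # about 2.14 s

# Rough training speedup implied by the two durations.
training_speedup = TRAINING_DAYS_BEFORE / TRAINING_DAYS_AFTER
print(f"training speedup: about {training_speedup:.1f}x")  # about 4.7x
```

The training ratio is only a rough estimate because the 2 weeks are a lower bound, but it is in line with the "four times as fast" figure mentioned earlier.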

If you are interested in what this sounds like, do not miss out on the audio examples produced by the Parallel WaveGAN, and have a look at the original paper:

Happy Digging and keep an eye on our future “Audio Synthesis: What’s next?” posts!

Don’t forget: be active and responsible in your community – and stay healthy!
