Digger – Detecting Video Manipulation & Synthetic Media

What happens when we cannot trust what we see or hear anymore? First of all: don’t panic! Question the content: Could that be true? And when you are not 100 percent sure, do not share, but search for other media reports about it to double-check.

How do professional journalists and human rights organisations do this? Every video out there could be manipulated. With video editing software anyone can edit a video.

Adobe After Effects gets content-aware fill to let you remove unwanted objects from videos https://t.co/VGeP3IuwDK pic.twitter.com/t4J5RCbjI1

— The Verge (@verge) April 3, 2019

It is challenging to verify content that has been edited, mislabeled or staged. It is even harder to verify content that has itself been modified. We roughly distinguish two kinds of manipulation:

- Shallow fakes: manipulated audiovisual content (image, audio, video) generated with ‘low tech’ technologies like Cut & Paste or speed adjustments.

- Deepfakes: artificial (synthetic) audiovisual content (image, audio, video) generated with technologies like Machine Learning.

Deepfakes and synthetic media are among the most feared developments in journalism today. The term deepfake describes audio and video files that have been created using artificial intelligence. Synthetic media is media that does not depict reality, and at the moment it is often referred to as deepfakes. Algorithms can create or swap faces and places, and generate synthetic voices that realistically mimic human speech and facial expressions but do not actually exist. That means machine-learning technology can fabricate a video with audio to make people do and say things they never did or said. Such synthetic media can be extremely realistic and convincing, yet it is entirely artificial.

Detection of synthetic media

Face or body swapping, voice cloning and modifying the speed of a video are new forms of manipulating content, and the technology is becoming widely accessible.

At the moment the real challenge is the so-called shallow fakes. Remember the video in which Nancy Pelosi appeared to be drunk during a speech? It turned out the video had simply been slowed down, with the pitch turned up to cover up the manipulation. Video manipulation and the creation of synthetic media are not the end of the truth, but they make us more cautious before using content in our reporting.
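Why would the pitch need to be turned up after slowing a video down? When audio is simply played back more slowly, every frequency drops by the same factor as the speed. The following is a minimal sketch of that arithmetic, not a description of how any particular video was edited; the function names and the example speed factors are assumptions made for illustration.

```python
import math


def pitch_shift_semitones(speed_factor: float) -> float:
    """Pitch change, in semitones, caused by playing audio at
    `speed_factor` times its original speed without any correction.

    A factor below 1.0 (slower playback) gives a negative value (lower pitch).
    """
    if speed_factor <= 0:
        raise ValueError("speed_factor must be positive")
    # One octave (a doubling of frequency) equals 12 semitones.
    return 12.0 * math.log2(speed_factor)


def compensation_semitones(speed_factor: float) -> float:
    """Pitch correction, in semitones, needed to bring the voice back
    to its original pitch after the speed change."""
    return -pitch_shift_semitones(speed_factor)


# Illustrative speed factors (assumptions, not measurements of a real clip).
for factor in (0.5, 0.75, 0.9):
    print(factor, round(pitch_shift_semitones(factor), 2))
```

Slower speech with an unchanged pitch sounds slurred rather than obviously edited, which is why this kind of low-tech manipulation can be hard to spot by ear alone.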

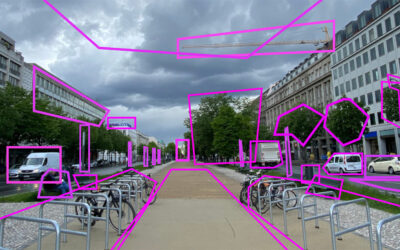

On the technology side it is a rat race. Forensic journalism can help detect altered media. DW's Research & Cooperation team works with ATC, a technology company from Greece, and the Fraunhofer Institute for Digital Media Technology to detect manipulation in videos.

Digger – Audio forensics

In the Digger project we focus on using audio forensics technologies to detect manipulation. Audio is an essential part of video and with a synthetic voice of a politician or the tampered noise of a gunshot a story can change completely. Digger aims to provide functionalities to detect audio tampering and manipulation in videos.

Our approach makes use of:

- Microphone analysis: Analysing the device being used for the recording of audio.

- Electrical Network Frequency (ENF) Analysis: Detecting edits (cut & paste) in audio.

- Codec Analysis: We follow the digital footprint of audio by extraction of ENF traces.
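To give a feel for how mains hum can reveal a cut, here is a minimal sketch of one generic idea: the hum picked up from the power grid should advance smoothly, so a sudden phase jump hints at a splice. This is not the Digger implementation. The 50 Hz grid frequency, the window length, the threshold and all function names are assumptions, and the "recording" is a synthetic sine wave.

```python
import cmath
import math


def make_hum(duration, sample_rate, freq=50.0):
    """Synthetic mains hum: a pure sine at `freq` Hz (stand-in for a real recording)."""
    count = round(duration * sample_rate)
    return [math.sin(2 * math.pi * freq * n / sample_rate) for n in range(count)]


def hum_phase(samples, sample_rate, freq):
    """Phase (radians) of the `freq` component of `samples`, via a single-bin DFT."""
    acc = 0j
    for n, x in enumerate(samples):
        acc += x * cmath.exp(-2j * math.pi * freq * n / sample_rate)
    return cmath.phase(acc)


def find_phase_jumps(signal, sample_rate, freq=50.0, window=0.2, threshold=0.5):
    """Start times (seconds) of windows whose hum phase deviates from the
    phase predicted by the previous window by more than `threshold` radians."""
    size = round(window * sample_rate)
    # Phase advance a steady hum would show from one window to the next.
    expected = 2 * math.pi * freq * size / sample_rate
    jumps = []
    previous = None
    for start in range(0, len(signal) - size + 1, size):
        phase = hum_phase(signal[start:start + size], sample_rate, freq)
        if previous is not None:
            # Wrap the deviation into (-pi, pi].
            deviation = cmath.phase(cmath.exp(1j * (phase - previous - expected)))
            if abs(deviation) > threshold:
                jumps.append(start / sample_rate)
        previous = phase
    return jumps


clean = make_hum(2.0, 1000)
# Simulate a cut: drop 7 samples (7 ms) starting at sample 900.
spliced = clean[:900] + clean[907:]

print(find_phase_jumps(clean, 1000))    # steady hum
print(find_phase_jumps(spliced, 1000))  # hum with a splice near 0.9 s
```

Real recordings are far messier: the hum is weak, the grid frequency drifts, and speech or noise overlaps it, so practical tools need filtering and more robust statistics than this toy example.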

We're using partial audio matching to find near-duplicates. Seems like this manipulated Biden video is a clear case of taking a sentence out of context... #bidenvideo #desinformation https://t.co/eXFanEkkfF pic.twitter.com/q8jJ2Wi7q9

— Digger - Deepfake Detection (@digger_project) March 9, 2020
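Partial audio matching, as mentioned in the tweet above, means locating a short clip inside a longer original recording. The sketch below shows the basic idea with a sliding normalized cross-correlation on raw samples. It is only an illustration under simplifying assumptions, not the method used in Digger: the function name is hypothetical, the "audio" is random test data, and production systems typically compare compact audio fingerprints instead of raw samples.

```python
import math
import random


def best_match_offset(clip, recording):
    """Return (offset, score) for the position in `recording` where `clip`
    fits best. The score is a normalized cross-correlation in [-1, 1];
    values close to 1 indicate a near-duplicate, even at a different volume."""
    m = len(clip)
    if m == 0 or m > len(recording):
        raise ValueError("clip must be non-empty and no longer than recording")
    clip_mean = sum(clip) / m
    c = [x - clip_mean for x in clip]
    c_norm = math.sqrt(sum(x * x for x in c))
    best = (0, -1.0)
    for offset in range(len(recording) - m + 1):
        segment = recording[offset:offset + m]
        seg_mean = sum(segment) / m
        s = [x - seg_mean for x in segment]
        s_norm = math.sqrt(sum(x * x for x in s))
        if c_norm == 0 or s_norm == 0:
            continue  # silent segment: correlation is undefined
        score = sum(a * b for a, b in zip(c, s)) / (c_norm * s_norm)
        if score > best[1]:
            best = (offset, score)
    return best


# Random samples stand in for a long original recording.
rng = random.Random(0)
recording = [rng.uniform(-1.0, 1.0) for _ in range(1000)]
# The "viral" clip: an excerpt taken from sample 300, at half the volume.
clip = [0.5 * x for x in recording[300:400]]

offset, score = best_match_offset(clip, recording)
print(offset, round(score, 3))
```

Once the matching position is known, a journalist can listen to what was said immediately before and after the excerpt and judge whether the sentence was taken out of context.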

Synthetic media in reality

Synthetic media technologies can have a positive as well as a negative impact on society.

It is exciting and scary at the same time to think about the ability to create audio-visual content the way we want it rather than the way it exists in reality. Voice synthesis will allow us to speak in hundreds of languages in our own voice (see the David Beckham video).

Or we could bring the master of surrealism back to life:

With the same technology you can also make politicians say something they never said or place people in scenes they have never been in. These technologies are used a lot in pornography, but their hard-to-imagine impact is also showcased in short clips in which actors are placed in films they never acted in. Possibly one of the most harmful effects is that perpetrators can easily claim “that’s a deepfake” in order to dismiss any contested information.

How can the authenticity of information be verified reliably? This is exactly what we aim to address with our project Digger.

Stay tuned and get involved

We will publish regular updates about our technology and external developments, and interview experts to learn about ethical, legal and hands-on expertise.

The Digger project is developing a community to share knowledge and initiate collaboration in the field of synthetic media detection. Interested? Follow us on Twitter @Digger_project and send us a DM or leave a comment below.
